This post is mainly summarized from original PER paper, but with more detailed and illustrative explanation, we will go through key ideas and implementation of Prioritized Double DQN.

1. Prioritized Experience Replay

Online reinforcement learning (RL) agents incrementally update their parameters (of the policy, value function or model) while they observe a stream of experience. In their simplest form, they discard incoming data immediately, after a single update. Two issues with this are (a) strongly correlated updates that break the i.i.d. assumption of many popular stochastic gradient-based algorithms, and (b) the rapid forgetting of possibly rare experiences that would be useful later on.

Experience replay addresses both of these issues: with experience stored in a replay memory, it becomes possible to break the temporal correlations by mixing more and less recent experience for the updates, and rare experience will be used for more than just a single update. DQN used a large sliding window replay memory, sampled from it uniformly at random In general, experience replay can reduce the amount of experience required to learn, and replace it with more computation and more memory – which are often cheaper resources than the RL agent’s interactions with its environment.

In traditional DQN, during the experience replay process, experience transitions were uniformly sampled from a replay buffer, this approach simply replays transitions at the same frequency that they were originally experienced, regardless of their significance. Prioritized experience replay approach is developed so as to replay important transitions more frequently, and therefore learn more efficiently.

Prioritized Experience Replay focuses on how prioritizing which transitions are replayed can make experience replay more efficient and effective than if all transitions are replayed uniformly. The key idea is that an RL agent can learn more effectively from some transitions than from others. Transitions may be more or less surprising, redundant, or task-relevant. Some transitions may not be immediately useful to the agent, but might become so when the agent competence increases.

Experience Replay liberates online learning agents from processing transitions in the exact order they are experienced. Prioritized replay further liberates agents from considering transitions with the same frequency that they are experienced.

Prioritized Experience Replay proposes to more frequently replay transitions with high expected learning progress, as measured by the magnitude of their temporal-difference (TD) error. However, this prioritization can lead to a loss of diversity, which we alleviate with stochastic prioritization, and introduce bias, which is corrected with importance sampling.

2. Prioritizing with TD-error

TD-errors have also been used as a prioritization mechanism for determining where to focus resources, for example when choosing where to explore or which features to select.

The importance of each transition, one idealized criterion to measure would be the amount the RL agent can learn from a transition in its current state, which cannot be accessible directly, a reasonable proxy for this measure is the magnitude of a transition’s TD error δ, which indicates how ‘surprising’ or unexpected the transition is: specifically, how far the value is from its next-step bootstrap estimate. . This is particularly suitable for incremental, online RL algorithms, such as SARSA or Q-learning, that already compute the TD-error and update the parameters in proportion to ![]() .

.

In “greedy TD-error prioritization”, the transition with the largest absolute TD error is replayed from the memory. A Q-learning update is applied to this transition, which updates the weights in proportion to the TD error. New transitions arrive without a known TD-error, so we put them at maximal priority in order to guarantee that all experience is seen at least once.

Prioritized sampling, as the name implies, will weigh the samples so that “important” ones are drawn more frequently for training.

3. Stochastic Prioritization

Issues of greedy TD-error prioritization: to avoid expensive sweeps over the entire replay memory, transitions that have a low TD error on first visit may not be replayed for a long time; be sensitive to noise spikes; greedy prioritization focuses on a small subset of the experience (initially high error transitions get replayed frequently).

To overcome these issues, we introduce a stochastic sampling method that interpolates between pure greedy prioritization and uniform random sampling. We ensure that the probability of being sampled is monotonic in a transition’s priority, while guaranteeing a non-zero probability even for the lowest-priority transition. Concretely, we define the probability of sampling transition ![]() as

as

![]()

where ![]() is the priority of transition

is the priority of transition ![]() . The exponent

. The exponent ![]() determines how much prioritization is used, with

determines how much prioritization is used, with ![]() corresponding to the uniform case.

corresponding to the uniform case.

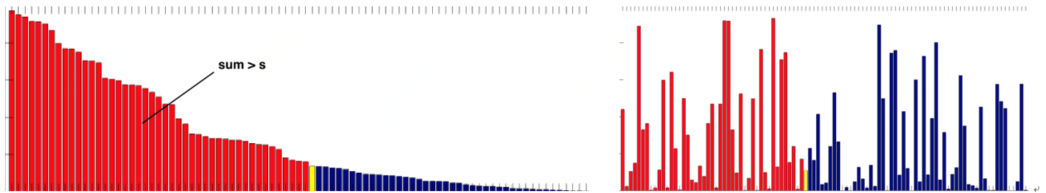

Proportional prioritization

![]()

where ![]() is a small positive constant that prevents the edge-case of transitions not being revisited once their error is zero.

is a small positive constant that prevents the edge-case of transitions not being revisited once their error is zero.

Rank-based prioritization

![]()

where ![]() is the rank of transition

is the rank of transition ![]() when the replay memory is sorted according to

when the replay memory is sorted according to ![]() . In this case,

. In this case, ![]() becomes a power-law distribution with exponent

becomes a power-law distribution with exponent ![]() . Both distributions are monotonic in

. Both distributions are monotonic in ![]() , but the latter is likely to be more robust, as it is insensitive to outliers.

, but the latter is likely to be more robust, as it is insensitive to outliers.

Priority is translated to probability of being chosen for replay. A sample ![]() has a probability of being picked during the experience replay determined by a formula (

has a probability of being picked during the experience replay determined by a formula ( ![]() ):

):

![]()

4. Bias Correction

Correct bias with important sampling.

The estimation of the expected value with stochastic updates relies on those updates corresponding to the same distribution as its expectation. Prioritized replay introduces bias because it changes this distribution in an uncontrolled fashion, and therefore changes the solution that the estimates will converge to (even if the policy and state distribution are fixed). We can correct this bias by using importance-sampling (IS) weights

![]()

that fully compensates for the non-uniform probabilities ![]() if

if ![]() . These weights can be folded into the Q-learning update by using

. These weights can be folded into the Q-learning update by using ![]() instead of

instead of ![]() (this is thus weighted IS, not ordinary IS), For stability reasons, we always normalize weights by

(this is thus weighted IS, not ordinary IS), For stability reasons, we always normalize weights by ![]() so that they only scale the update downwards.

so that they only scale the update downwards.

More explanations for the bias correction of prioritized experience replay:

After calculating ![]() , as a consequence, during each time step, we will get a batch of samples with batch probability distribution and train our network on it. But, we still have a problem here. With normal Experience Replay (deque), we use a stochastic update rule. As a consequence, the way we sample the experiences must match the underlying distribution they came from.

, as a consequence, during each time step, we will get a batch of samples with batch probability distribution and train our network on it. But, we still have a problem here. With normal Experience Replay (deque), we use a stochastic update rule. As a consequence, the way we sample the experiences must match the underlying distribution they came from.

When we have normal(deque) experience, we select our experiences in a normal distribution — simply put and then we select our experiences randomly. There is no bias, because each experience has the same chance to be taken, so we can update our weights normally. But, because we use priority sampling, purely random sampling is abandoned. As a consequence, we introduce bias toward high-priority samples (more chances to be selected).

If we would update our weights normally, we have a risk of over-fitting our agent. Samples that have high priority are likely to be used for training many times in comparison with low priority experiences (= bias). As a consequence, we’ll update our weights with only a small portion of experiences that we consider to be really interesting.

That why we use importance sampling weights (IS) that will adjust the updating by reducing the weights of the often seen samples to correct the bias.

The weights corresponding to high-priority samples have very little adjustment (because the network will see these experiences many times), whereas those corresponding to low-priority samples will have a full update.

The role of bias is to control how much these importance sampling weights affect learning. In practice, the bias parameter is annealed up to 1 over the duration of training, because these weights are more important in the end of learning when our q values begin to converge. The unbiased nature of updates is most important near convergence.

5. Efficient Implementation

To scale to large memory sizes ![]() , a binary heap data structure for the priority queue, for which finding the maximum priority transition when sampling is

, a binary heap data structure for the priority queue, for which finding the maximum priority transition when sampling is ![]() and updating priorities (with the new TD-error after a learning step) is

and updating priorities (with the new TD-error after a learning step) is ![]() .

.

Complexity analysis

How do we store the experience and effectively sample from it?

(Source: https://jaromiru.com/2016/11/07/lets-make-a-dqn-double-learning-and-prioritized-experience-replay/)

A naive implementation would be to have all samples in an array sorted according to their priorities. A random number ![]() ,

,![]() , would be picked and the array would be walked left to right, summing up a priority of the current element until the sum is exceeded and that element is chosen. This will select a sample with the desired probability distribution. But this would have a terrible efficiency:

, would be picked and the array would be walked left to right, summing up a priority of the current element until the sum is exceeded and that element is chosen. This will select a sample with the desired probability distribution. But this would have a terrible efficiency: ![]() for insertion and update and

for insertion and update and ![]() for sampling.

for sampling.

A first important observation is that we don’t have to actually store the array sorted. Unsorted array would do just as well. Elements with higher priorities are still picked with higher probability.

This releases the need for sorting, improving the algorithm to ![]() for insertion and update.

for insertion and update.

But the ![]() for sampling is still too high. We can further improve our algorithm by using a different data structure. We can store our samples in unsorted sum tree – a binary tree data structure where the parent’s value is the sum of its children. The samples themselves are stored in the leaf nodes.

for sampling is still too high. We can further improve our algorithm by using a different data structure. We can store our samples in unsorted sum tree – a binary tree data structure where the parent’s value is the sum of its children. The samples themselves are stored in the leaf nodes.

Update of a leaf node involves propagating a value difference up the tree, obtaining ![]() . Sampling follows the thought process of the array case, but achieves

. Sampling follows the thought process of the array case, but achieves ![]() . For a value

. For a value ![]() ,

,![]() , we use the following algorithm (pseudo code):

, we use the following algorithm (pseudo code):

|

1 2 3 4 5 6 7 8 |

def retrieve(n, s): if n is leaf_node: return n if n.left.val >= s: return retrieve(n.left, s) else: return retrieve(n.right, s - n.left.val) |

Following picture illustrates sampling from a tree with ![]() :

:

Implementation details see original PER paper:

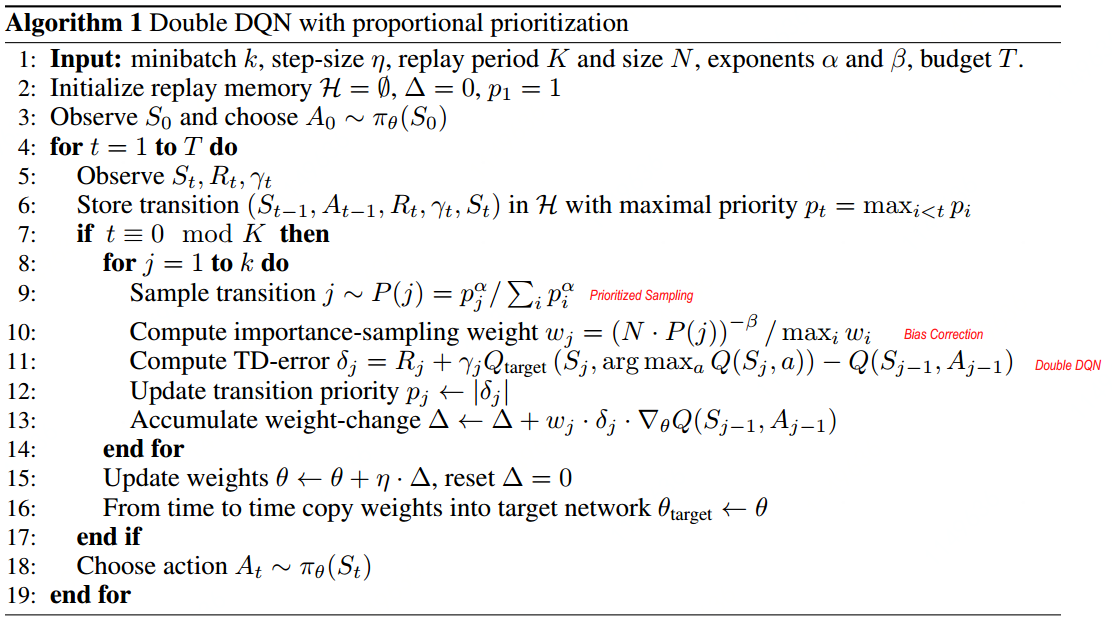

Corresponding Pseudocode is as follow:

Corresponding Pseudocode is as follow:

6. Code

Simple Cartpole Version:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 |

import gym import random import numpy as np from collections import deque import tensorflow as tf env_name = 'CartPole-v0' env = gym.make(env_name) class QNetwork(object): def __init__(self, state_dim, action_size, tau=0.01): tf.reset_default_graph() self.state_in = tf.placeholder(tf.float32, shape=[None, *state_dim]) self.action_in = tf.placeholder(tf.int32, shape=[None]) self.q_target_in = tf.placeholder(tf.float32, shape=[None]) self.importance_in = tf.placeholder(tf.float32, shape=[None]) action_one_hot = tf.one_hot(self.action_in, depth=action_size) self.q_state_local = self.build_model(action_size, 'local') self.q_state_target = self.build_model(action_size, 'target') self.q_state_action = tf.reduce_sum(tf.multiply(self.q_state_local, action_one_hot), axis=1) self.error = self.q_state_action - self.q_target_in self.loss = tf.reduce_mean(tf.multiply(tf.square(self.error), self.importance_in)) self.optimizer = tf.train.AdamOptimizer(learning_rate=0.001).minimize(self.loss) self.local_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='local') self.target_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='target') self.updater = tf.group([tf.assign(t, t + tau * (l-t)) for t,l in zip(self.target_vars, self.local_vars)]) def build_model(self, action_size, scope): with tf.variable_scope(scope): hidden1 = tf.layers.dense(self.state_in, 100, activation=tf.nn.relu) q_state = tf.layers.dense(hidden1, action_size, activation=None) return q_state def update_model(self, session, state, action, q_target, importance): feed = {self.state_in: state, self.action_in: action, self.q_target_in: q_target, self.importance_in: importance} error, _, _ = session.run([self.error, self.optimizer, self.updater], feed_dict=feed) return error def get_q_state(self, session, state, use_target=False): q_state_op = self.q_state_target if use_target else self.q_state_local q_state = session.run(q_state_op, feed_dict={self.state_in: state}) return q_state class PrioritizedReplayBuffer(object): def __init__(self, maxlen): self.buffer = deque(maxlen=maxlen) self.priorities = deque(maxlen=maxlen) def add(self, experience): self.buffer.append(experience) self.priorities.append(max(self.priorities, default=1)) def get_probabilites(self, priority_scale): scaled_priorities = np.array(self.priorities) ** priority_scale sample_probabilities = scaled_priorities / sum(scaled_priorities) return sample_probabilities def get_importance(self, probabilities): importance = 1/len(self.buffer) * 1/probabilities importance_normalized = importance / max(importance) return importance_normalized def sample(self, batch_size, priority_scale=1.0): sample_size = min(len(self.buffer), batch_size) sample_probs = self.get_probabilites(priority_scale) sample_indices = random.choices(range(len(self.buffer)), k=sample_size, weights=sample_probs) samples = np.array(self.buffer)[sample_indices] importance = self.get_importance(sample_probs[sample_indices]) return map(list, zip(*samples)), importance, sample_indices def set_priorities(self, indices, errors, offset=0.1): for i,e in zip(indices, errors): self.priorities[i] = abs(e) + offset class DoubleDQNAgent(object): def __init__(self, env): self.state_dim = env.observation_space.shape self.action_size = env.action_space.n self.q_network = QNetwork(self.state_dim, self.action_size) self.replay_buffer = PrioritizedReplayBuffer(maxlen=100000) self.gamma = 0.97 self.eps = 1.0 self.sess = tf.Session() self.sess.run(tf.global_variables_initializer()) def get_action(self, state): q_state = self.q_network.get_q_state(self.sess, [state]) action_greedy = np.argmax(q_state) action_random = np.random.randint(self.action_size) action = action_random if random.random() < self.eps else action_greedy return action def train(self, state, action, next_state, reward, done, use_DDQN=True, a=0.0): self.replay_buffer.add((state, action, next_state, reward, done)) (states, actions, next_states, rewards, dones), importance, indices = \ self.replay_buffer.sample(50, priority_scale=a) next_actions = np.argmax(self.q_network.get_q_state(self.sess, next_states, use_target=False), axis=1) q_next_states = self.q_network.get_q_state(self.sess, next_states, use_target=use_DDQN) q_next_states[dones] = np.zeros([self.action_size]) q_next_states_next_actions = q_next_states[np.arange(next_actions.shape[0]), next_actions] q_targets = reward + self.gamma * q_next_states_next_actions errors = self.q_network.update_model(self.sess, states, actions, q_targets, importance**(1-self.eps)) self.replay_buffer.set_priorities(indices, errors) if done: self.eps = max(0.1, 0.99*self.eps) def __del__(self): self.sess.close() num_runs = 10 run_rewards = [] for n in range(num_runs): print('Run {}'.format(n)) ep_rewards = [] agent = DoubleDQNAgent(env) num_episodes = 200 for ep in range(num_episodes): state = env.reset() total_reward = 0 done = False while not done: action = agent.get_action(state) next_state, reward, done, info = env.step(action) agent.train(state, action, next_state, reward, done, a=(n%2==0)*0.7) #env.render() total_reward += reward state = next_state ep_rewards.append(total_reward) print("Episode: {}, total_reward: {:.2f}".format(ep, total_reward)) run_rewards.append(ep_rewards) |

Detailed Version:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 295 296 297 298 299 300 301 302 303 304 305 306 307 308 309 310 311 312 313 314 315 316 317 318 319 320 321 322 323 324 325 326 327 328 329 330 331 332 333 334 335 336 337 338 339 340 341 342 343 344 345 346 347 348 349 350 351 352 353 354 355 356 357 358 359 360 361 362 363 364 365 366 367 368 369 370 371 372 373 374 375 376 377 378 379 380 381 382 383 384 385 386 387 388 389 390 391 392 393 394 395 396 397 398 399 400 401 402 403 |

import gym import os import pylab import numpy as np from collections import deque from keras.models import Model, load_model from keras.layers import Input, Dense, Lambda, Add from keras.optimizers import Adam, RMSprop from keras import backend as K import random class SumTree(object): data_pointer = 0 # initialize the tree with all nodes = 0, and initialize the data with all values = 0 def __init__(self, capacity): # Number of leaf nodes (final nodes) that will store experiences self.capacity = capacity # Generate the tree with all nodes values = 0 # To understand this calculation (2 * capacity - 1) look at the schema below # Remember in a binary node (each node has max 2 children) so 2x size of leaf (capacity) - 1 (root node) # Parent nodes = capacity - 1 # Leaf nodes = capacity self.tree = np.zeros(2 * capacity - 1) # total nodes number # Store the actual experiences here, the size of data is capacity self.data = np.zeros(capacity, dtype=object) # data/experiences store in the leaf nodes # define function that will add priority score in the sumtree leaf and add the experience in data # Adds a data to data and updates corresponding priority def add(self, priority, data): # experience index tree_index = self.data_pointer + self.capacity - 1 """tree: 0 / \ 0 0 / \ / \ tree_index 0 0 0 We fill the leaves from left to right """ # Update data frame self.data[self.data_pointer] = data # Update the sum tree with new priorities self.update(tree_index, priority) # Increment the data pointer as data is stored in left to right # Create a ring buffer with fixed capacity by overwriting new data self.data_pointer += 1 if self.data_pointer >= self.capacity: # If we're above the capacity, go back to first index (we overwrite) self.data_pointer = 0 def update(self, tree_index, priority): # Change is the update factor we need to do in sum tree change = priority - self.tree[tree_index] # Store the priority first in the tree self.tree[tree_index] = priority # Update the change to parent nodes, propagate the change through tree while tree_index != 0: tree_index = (tree_index - 1) // 2 self.tree[tree_index] += change def get_leaf(self, v): # Traverse from top of the tree parent_index = 0 while True: left_child_index = parent_index * 2 + 1 right_child_index = left_child_index + 1 # Base condition to break once tree traversal is complete if left_child_index >= len(self.tree): leaf_index = parent_index break # Find the node with maximum priority and traverse that subtree else: # downward search, always search for a higher priority node if v <= self.tree[left_child_index]: parent_index = left_child_index else: v -= self.tree[left_child_index] parent_index = right_child_index # The max priority which we could fetch for given input priority data_index = leaf_index - self.capacity + 1 # Return index of priorities, priorities, and experience return leaf_index, self.tree[leaf_index], self.data[data_index] @property def total_priority(self): return self.tree[0] # Returns the root node class Memory(object): # stored as ( state, action, reward, next_state ) in SumTree PER_e = 0.01 # Hyperparameter used to avoid some experiences to have 0 probability of being taken PER_a = 0.6 #Hyperparameter used to make a tradeoff between taking only exp with high priority and sampling randomly PER_b = 0.4 # importance-sampling, from initial value increasing to 1 PER_b_increment_per_sampling = 0.001 # b_increment_per_sampling max_error = 1.0 # clipped abs error def __init__(self, capacity): # Build a SumTree self.tree = SumTree(capacity) # define a function to store a new experience in the tree. # Each new experience will have a score of max_prority (it will be then improved when we use this exp to # train our DDQN). def store(self, experience): # New experiences are first stored in the tree with max priority max_priority = np.max(self.tree.tree[-self.tree.capacity:]) # Find the max priority #If the max priority = 0 we can't put priority = 0 since this experience will never have a chance to be selected # So we use a minimum priority if max_priority == 0: max_priority = self.max_error self.tree.add(max_priority, experience) # set the max priority for new priority # Define sample function used to pick batch from the tree memory, which will be used to train the model. # - First, sample a minibatch of n size, the range [0, priority_total] into priority ranges. # - Then a value is uniformly sampled from each range. # - Search in the sumtree, for the experience where priority score correspond to sample values are retrieved from. def sample(self, n): # Create a minibatch array that will contains the minibatch memory_b = [] b_idx = np.empty((n,), dtype=np.int32) b_ISWeights = np.empty((n, 1), dtype=np.float32) # Calculate the priority segment # Here, as explained in the paper, divide the Range[0, ptotal] into n ranges and split the total # priority into batch-sized segments priority_segment = self.tree.total_priority / n # priority segment # Scheduler for b self.PER_b = np.min([1., self.PER_b + self.PER_b_increment_per_sampling]) # max = 1 # Max weight min_prob = np.min(self.tree.tree[-self.tree.capacity:]) / self.tree.total_priority max_weight = (min_prob * n) ** (-self.PER_b) for i in range(n): # Calculate priority value for each segment to get corresponding experience a, b = priority_segment * i, priority_segment * (i + 1) v = np.random.uniform(a, b) # Experience that correspond to each value is retrieved index, priority, data = self.tree.get_leaf(v) # Fatch and normalize priority sampling_probobility = priority / self.tree.total_priority # Weight update factor depends on sampling probability of the experience and b b_ISWeights[i, 0] = np.power(n * sampling_probobility, -self.b) / max_weight # Store the index and experience b_idx[i] = index experience = data memory_b.append(experience) return b_idx, memory_b, b_ISWeights # Update the priorities on the tree def batch_update(self, tree_idx, abs_erros): abs_erros += self.PER_e # convert to abs and avoid 0 clipped_errors = np.minimum(abs_erros, self.max_error) ps = np.power(clipped_errors, self.PER_a) # For the chosen batch, depending on the error, update the priority in sum tree for ti, p in zip(tree_idx, ps): self.tree.update(ti, p) def DQNModel(input_shape, action_space, dueling): X_input = Input(input_shape) X = X_input # Input Layer of state size(4) and Hidden Layer with 512 nodes X = Dense(512, input_shape=input_shape, activation="relu", kernel_initializer='he_uniform')(X) X = Dense(256, activation="relu", kernel_initializer='he_uniform')(X) # Hidden layer with 256 nodes X = Dense(64, activation="relu", kernel_initializer='he_uniform')(X) # Hidden layer with 64 nodes if dueling: state_value = Dense(1, kernel_initializer='he_uniform')(X) state_value = Lambda(lambda s: K.expand_dims(s[:, 0], -1), output_shape=(action_space,))(state_value) action_advantage = Dense(action_space, kernel_initializer='he_uniform')(X) action_advantage = Lambda(lambda a: a[:, :] - K.mean(a[:, :], keepdims=True), output_shape=(action_space,))(action_advantage) X = Add()([state_value, action_advantage]) else: # Output Layer with # of actions: 2 nodes (left, right) X = Dense(action_space, activation="linear", kernel_initializer='he_uniform')(X) model = Model(inputs = X_input, outputs = X, name='CartPole PER D3QN model') model.compile(loss="mean_squared_error", optimizer=RMSprop(lr=0.00025, rho=0.95, epsilon=0.01), metrics=["accuracy"]) model.summary() return model class DQNAgent: def __init__(self, env_name): self.env_name = env_name self.env = gym.make(env_name) self.env.seed(0) # by default, CartPole-v1 has max episode steps = 500 # we can use this to experiment beyond 500 self.env._max_episode_steps = 4000 self.state_size = self.env.observation_space.shape[0] self.action_size = self.env.action_space.n self.EPISODES = 1000 # Instantiate memory memory_size = 10000 self.MEMORY = Memory(memory_size) self.memory = deque(maxlen=2000) self.gamma = 0.95 # discount rate # EXPLORATION HYPERPARAMETERS for epsilon and epsilon greedy strategy self.epsilon = 1.0 # exploration probability at start self.epsilon_min = 0.01 # minimum exploration probability self.epsilon_decay = 0.0005 # exponential decay rate for exploration prob self.batch_size = 32 # defining model parameters self.ddqn = True # use double deep q network self.Soft_Update = False # use soft parameter update self.dueling = True # use dealing netowrk self.epsilon_greedy = False # use epsilon greedy strategy self.USE_PER = False # use priority experienced replay self.TAU = 0.1 # target network soft update hyperparameter self.Save_Path = 'Models' self.scores, self.episodes, self.average = [], [], [] self.Model_name = os.path.join(self.Save_Path, self.env_name + "_PER_D3QN.h5") # create main model and target model self.model = DQNModel(input_shape=(self.state_size,), action_space=self.action_size, dueling=self.dueling) self.target_model = DQNModel(input_shape=(self.state_size,), action_space=self.action_size, dueling=self.dueling) # after some time interval update the target model to be same with model def update_target_model(self): if not self.Soft_Update and self.ddqn: self.target_model.set_weights(self.model.get_weights()) return if self.Soft_Update and self.ddqn: q_model_theta = self.model.get_weights() target_model_theta = self.target_model.get_weights() counter = 0 for q_weight, target_weight in zip(q_model_theta, target_model_theta): target_weight = target_weight * (1 - self.TAU) + q_weight * self.TAU target_model_theta[counter] = target_weight counter += 1 self.target_model.set_weights(target_model_theta) def remember(self, state, action, reward, next_state, done): experience = state, action, reward, next_state, done if self.USE_PER: self.MEMORY.store(experience) else: self.memory.append((experience)) def act(self, state, decay_step): # EPSILON GREEDY STRATEGY if self.epsilon_greedy: # Use an improved version of our epsilon greedy strategy for Q-learning explore_probability = self.epsilon_min + (self.epsilon - self.epsilon_min) * np.exp( -self.epsilon_decay * decay_step) # OLD EPSILON STRATEGY else: if self.epsilon > self.epsilon_min: self.epsilon *= (1 - self.epsilon_decay) explore_probability = self.epsilon if explore_probability > np.random.rand(): # Make a random action (exploration) return random.randrange(self.action_size), explore_probability else: # Get action from Q-network (exploitation) # Estimate the Qs values state # Take the biggest Q value (= the best action) return np.argmax(self.model.predict(state)), explore_probability def replay(self): if self.USE_PER: # Sample minibatch from the PER memory tree_idx, minibatch = self.MEMORY.sample(self.batch_size) else: # Randomly sample minibatch from the deque memory minibatch = random.sample(self.memory, min(len(self.memory), self.batch_size)) state = np.zeros((self.batch_size, self.state_size)) next_state = np.zeros((self.batch_size, self.state_size)) action, reward, done = [], [], [] # do this before prediction # for speedup, this could be done on the tensor level # but easier to understand using a loop for i in range(len(minibatch)): state[i] = minibatch[i][0] action.append(minibatch[i][1]) reward.append(minibatch[i][2]) next_state[i] = minibatch[i][3] done.append(minibatch[i][4]) # do batch prediction to save speed # predict Q-values for starting state using the main network target = self.model.predict(state) target_old = np.array(target) # predict best action in ending state using the main network target_next = self.model.predict(next_state) # predict Q-values for ending state using the target network target_val = self.target_model.predict(next_state) for i in range(len(minibatch)): # correction on the Q value for the action used if done[i]: target[i][action[i]] = reward[i] else: # the key point of Double DQN # selection of action is from model # update is from target model if self.ddqn: # Double - DQN # current Q Network selects the action # a'_max = argmax_a' Q(s', a') a = np.argmax(target_next[i]) # target Q Network evaluates the action # Q_max = Q_target(s', a'_max) target[i][action[i]] = reward[i] + self.gamma * (target_val[i][a]) else: # Standard - DQN # DQN chooses the max Q value among next actions # selection and evaluation of action is on the target Q Network # Q_max = max_a' Q_target(s', a') target[i][action[i]] = reward[i] + self.gamma * (np.amax(target_next[i])) if self.USE_PER: absolute_errors = np.abs(target_old[i] - target[i]) # Update priority self.MEMORY.batch_update(tree_idx, absolute_errors) # Train the Neural Network with batches self.model.fit(state, target, batch_size=self.batch_size, verbose=0) def load(self, name): self.model = load_model(name) def save(self, name): self.model.save(name) pylab.figure(figsize=(18, 9)) def PlotModel(self, score, episode): self.scores.append(score) self.episodes.append(episode) self.average.append(sum(self.scores[-50:]) / len(self.scores[-50:])) pylab.plot(self.episodes, self.average, 'r') pylab.plot(self.episodes, self.scores, 'b') pylab.ylabel('Score', fontsize=18) pylab.xlabel('Steps', fontsize=18) dqn = 'DQN_' softupdate = '' dueling = '' greedy = '' PER = '' if self.ddqn: dqn = 'DDQN_' if self.Soft_Update: softupdate = '_soft' if self.dueling: dueling = '_Dueling' if self.epsilon_greedy: greedy = '_Greedy' if self.USE_PER: PER = '_PER' try: pylab.savefig(dqn + self.env_name + softupdate + dueling + greedy + PER + ".png") except OSError: pass return str(self.average[-1])[:5] def run(self): decay_step = 0 for e in range(self.EPISODES): state = self.env.reset() state = np.reshape(state, [1, self.state_size]) done = False i = 0 while not done: # self.env.render() decay_step += 1 action, explore_probability = self.act(state, decay_step) next_state, reward, done, _ = self.env.step(action) next_state = np.reshape(next_state, [1, self.state_size]) if not done or i == self.env._max_episode_steps - 1: reward = reward else: reward = -100 self.remember(state, action, reward, next_state, done) state = next_state i += 1 if done: # every step update target model self.update_target_model() # every episode, plot the result average = self.PlotModel(i, e) print("episode: {}/{}, score: {}, e: {:.2}, average: {}".format(e, self.EPISODES, i, explore_probability, average)) if i == self.env._max_episode_steps: print("Saving trained model to", self.Model_name) # self.save(self.Model_name) break self.replay() PER_Agent = DQNAgent('CartPole-v0') PER_Agent.run() |

7. Summary

As a summary, we see the development of experience usage as shown in the following graph to refresh our knowledge of how the critical part-prioritized experience replay comes.